Publications

Hao Sun, Fenggen Yu, Huiyao Xu, Tao Zhang, Changqing Zou.

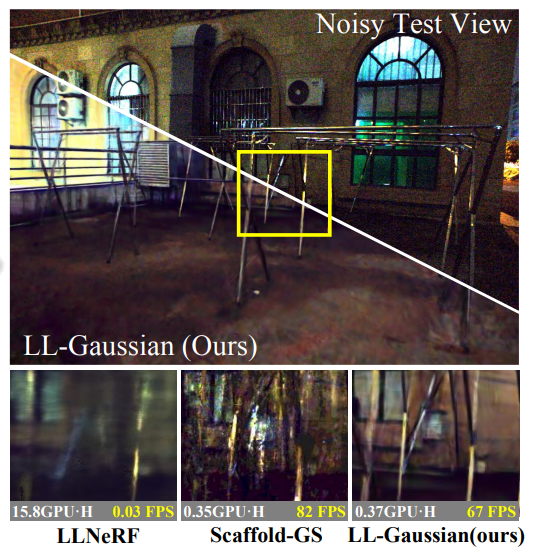

LL-Gaussian: Low-Light Scene Reconstruction and Enhancement via Gaussian Splatting for Novel View Synthesis.

[Paper], Accepted to [ACM MM 2025].

We propose LL-Gaussian, a novel framework for 3D reconstruction and enhancement from low-light sRGB images, enabling pseudo normal-light novel view synthesis.

Xiao Han, Runze Tian, Yifei Tong, Fenggen Yu, Dingyao Liu, Yan Zhang.

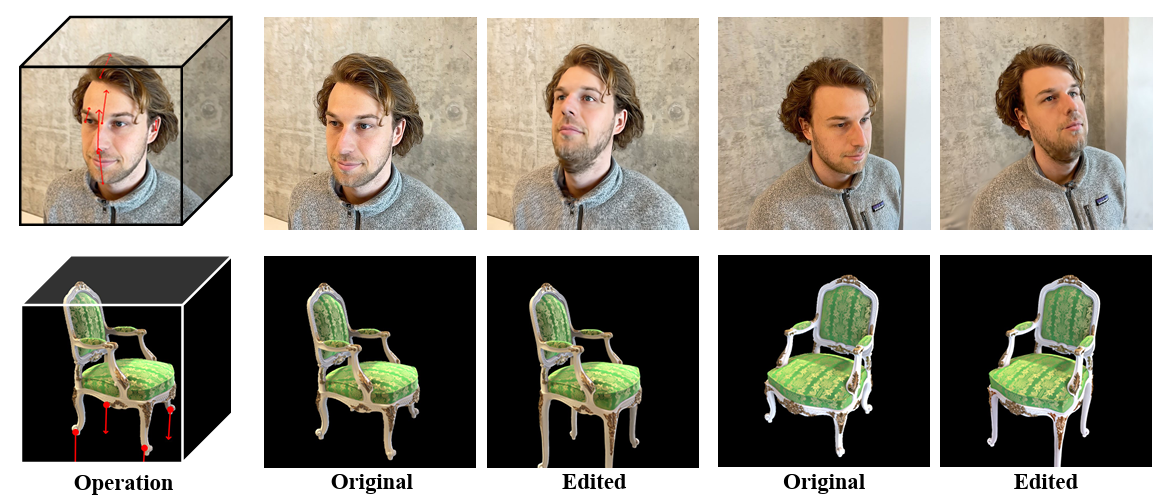

ARAP-GS: Drag-driven As-Rigid-As-Possible 3D Gaussian Splatting Editing with Diffusion Prior.

[Paper], Accepted to [IEEE ICASSP 2026].

We introduce ARAP-GS, a drag-driven 3DGS editing framework based on As-Rigid-As-Possible (ARAP) deformation. Unlike previous 3DGS editing methods, we are the first to apply ARAP deformation directly to 3D Gaussians, enabling flexible, drag-driven geometric transformations.

Yifei Tong, Runze Tian, Xiao Han, Dingyao Liu, Fenggen Yu, Yan Zhang.

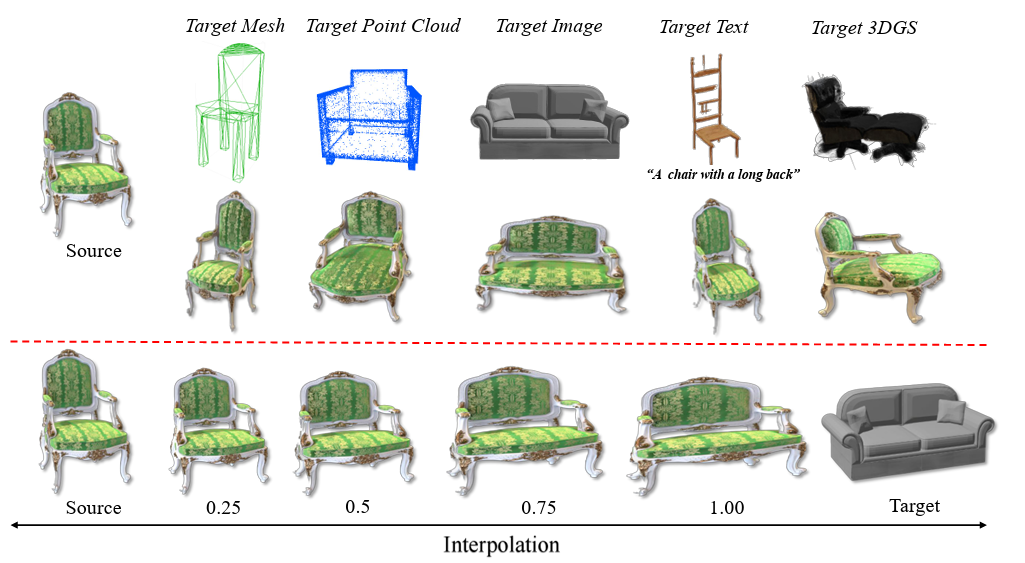

CAGE-GS: High-fidelity Cage Based 3D Gaussian Splatting Deformation.

[Paper]

We introduce CAGE-GS, a cage-based 3DGS deformation method that seamlessly aligns a source 3DGS scene with a user-defined target shape. Our approach learns a deformation cage from the target, which guides the geometric transformation of the source scene.

Fenggen Yu, Yiming Qian, Xu Zhang, Francisca Gil-Ureta, Brian Jackson, Eric Bennett, and Hao (Richard) Zhang.

DPA-Net: Structured 3D Abstraction from Sparse Views via Differentiable Primitive Assembly.

[Paper], Accepted to [ECCV 2024].

We present a differentiable rendering framework to learn structured 3D abstractions in the form of primitive assemblies from sparse RGB images capturing a 3D object.

Mingrui Zhao, Yizhi Wang, Fenggen Yu, and Ali Mahdavi-Amiri.

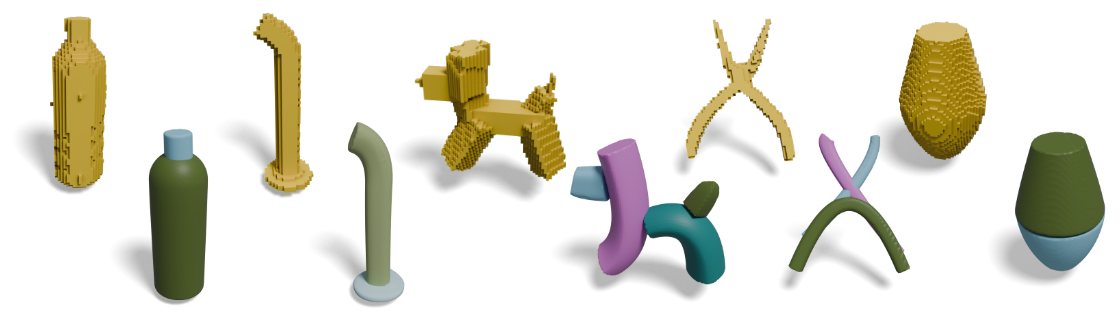

SweepNet: Unsupervised Learning Shape Abstraction via Neural Sweepers.

Accepted to [ECCV 2024].

Sweep surfaces, commonly found in human-made objects, aid shape abstraction by effectively capturing and representing object geometry. In this paper, we introduce SweepNet, a novel approach to shape abstraction through sweep surfaces.

Ruiqi Wang, Akshay Gadi Patil, Fenggen Yu, and Hao (Richard) Zhang.

Coarse-to-Fine Active Segmentation of Interactable Parts in Real Scene Images.

[Paper], Accepted to [ECCV 2024].

We introduce the first active learning (AL) framework for high-accuracy instance segmentation of dynamic, interactable parts from RGB images of real indoor scenes.

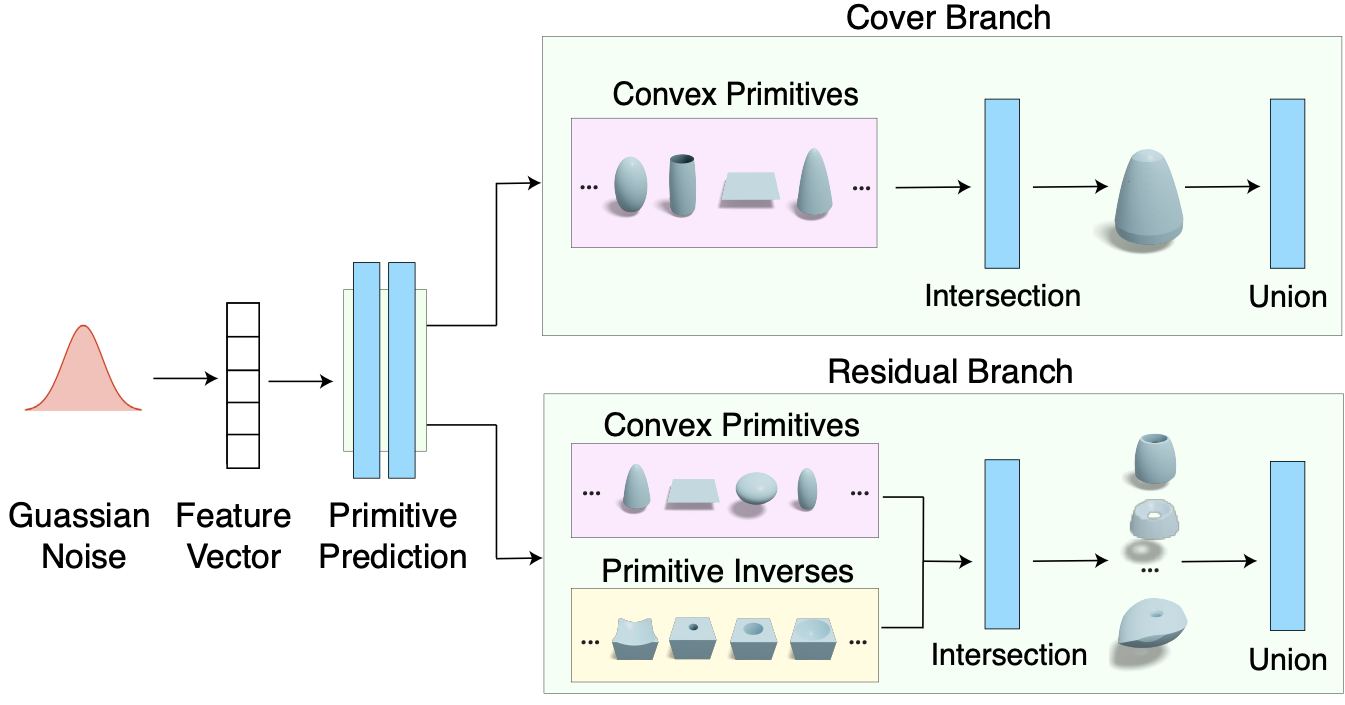

Fenggen Yu, Qimin Chen, Maham Tanveer, Ali Mahdavi-Amiri, and Hao (Richard) Zhang.

D2CSG: Unsupervised Learning of Compact CSG Trees with Dual Complements and Dropouts.

[Paper], Accepted to [NeurIPS 2023].

We present D2CSG, a neural model composed of two dual and complementary network branches, with dropouts, for unsupervised learning of compact constructive solid geometry (CSG) representations of 3D CAD shapes.

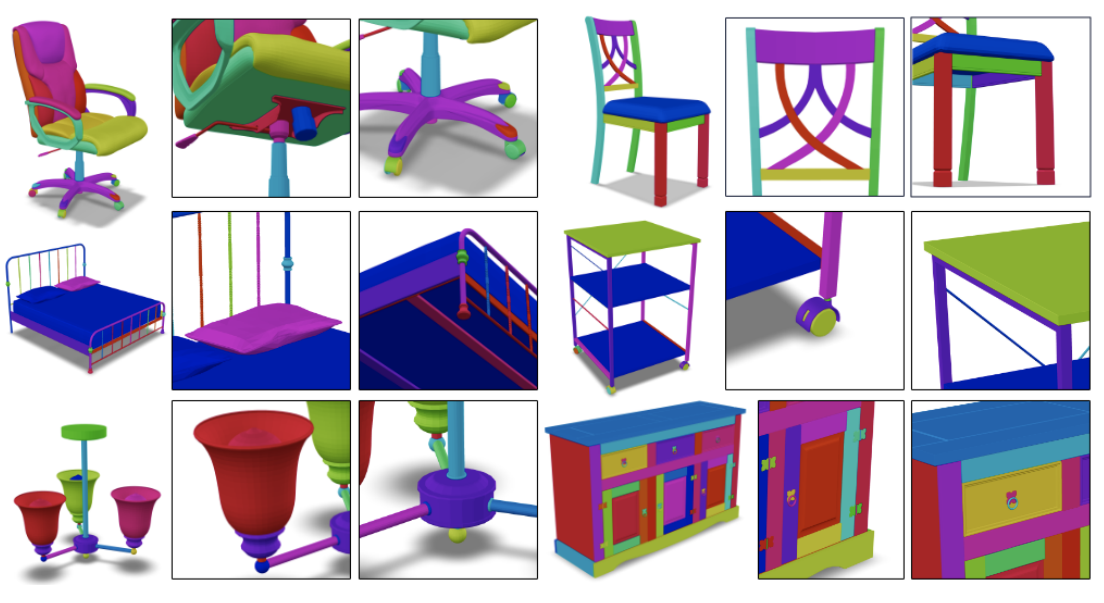

Fenggen Yu, Yiming Qian, Francisca Gil-Ureta, Brian Jackson, Eric Bennett, and Hao (Richard) Zhang.

HAL3D: Hierarchical Active Learning for Fine-Grained 3D Part Labeling.

[Paper], Accepted to [ICCV 2023].

We present the first active learning tool for fine-grained 3D part labeling, a problem which challenges even the most advanced deep learning (DL) methods due to the significant structural variations among the small and intricate parts.

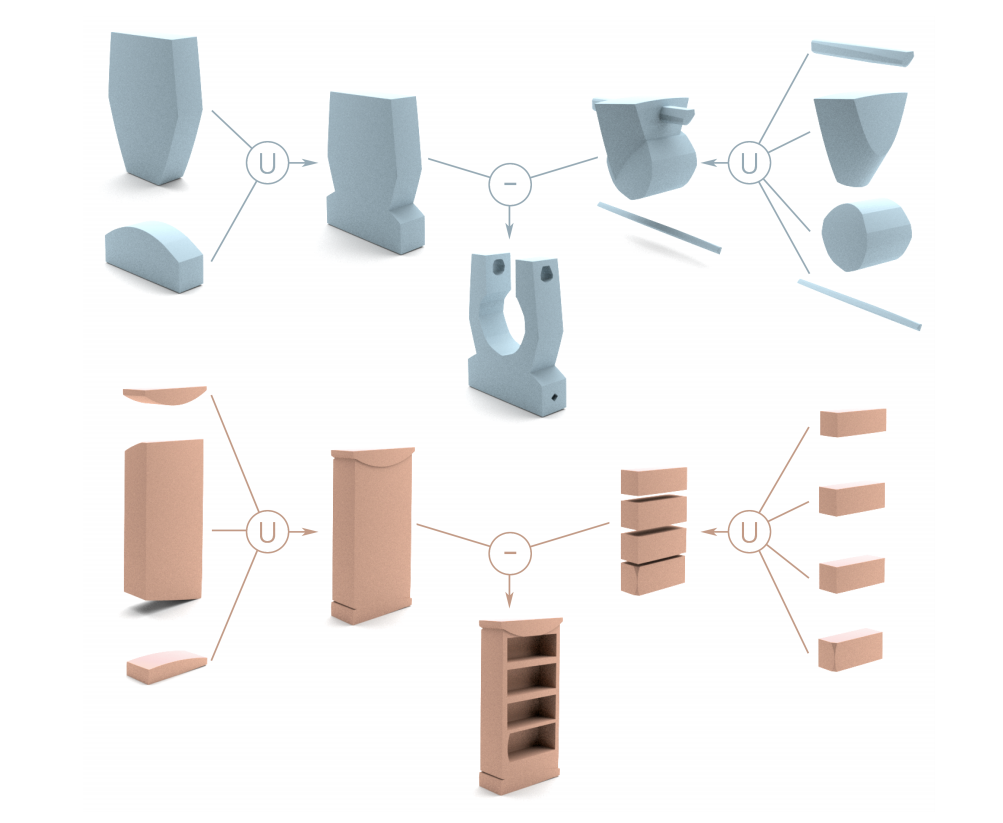

Fenggen Yu, Zhiqin Chen, Manyi Li, Aditya Sanghi, Hooman Shayani, Ali Mahdavi-Amiri, and Hao (Richard) Zhang.

CAPRI-Net: Learning Compact CAD Shapes with Adaptive Primitive Assembly.

[Paper], [Project Page], Accepted to [CVPR 2022]

We introduce CAPRI-Net, a neural network for learning compact and interpretable implicit representations of 3D computer-aided design (CAD) models, in the form of adaptive primitive assemblies.

Jiongchao Jin, Arezou Fatemi, Wallace Lira, Fenggen Yu, Biao Leng, Rui Ma, Ali Mahdavi-Amiri, and Hao (Richard) Zhang.

RaidaR: A Rich Annotated Image Dataset of Rainy Street Scenes.

[Paper], Second ICCV Workshop on Autonomous Vehicle Vision (AVVision), 2021

We introduce RaidaR, a rich annotated image dataset of rainy street scenes, to support autonomous driving research. The new dataset contains the largest number of rainy images (58,542) to date, of which 5,000 provide semantic segmentations and 3,658 provide object instance segmentations.

Ali Mahdavi-Amiri, Fenggen Yu, Haisen Zhao, Adriana Schulz, and Hao (Richard) Zhang.

VDAC: Volume Decompose-and-Carve for Subtractive Manufacturing.

Accepted to SIGGRAPH ASIA 2020|[Paper]|[Project page]

We introduce carvable volume decomposition for efficient 3-axis CNC machining of 3D freeform objects, where our goal is to develop a fully automatic method to jointly optimize setup and path planning.

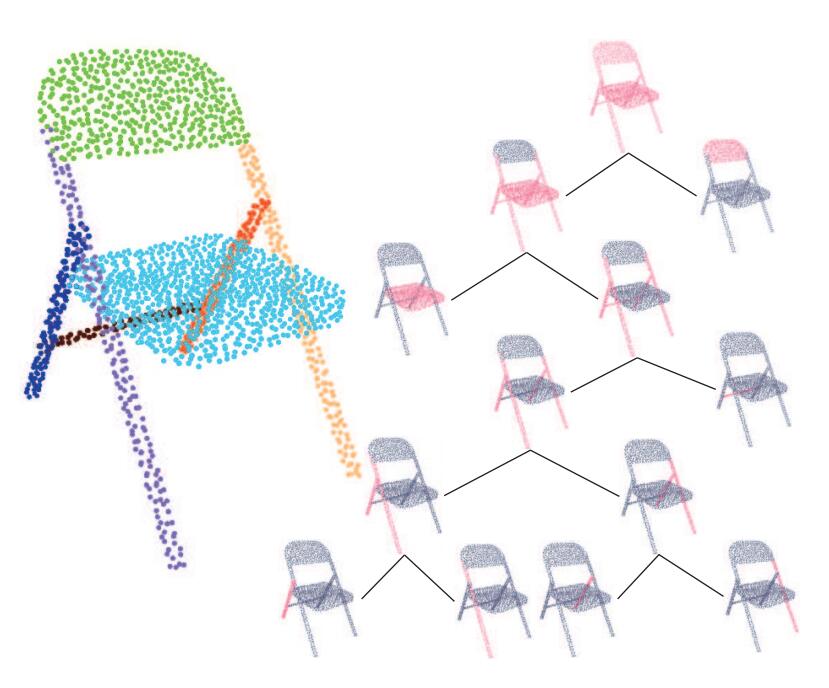

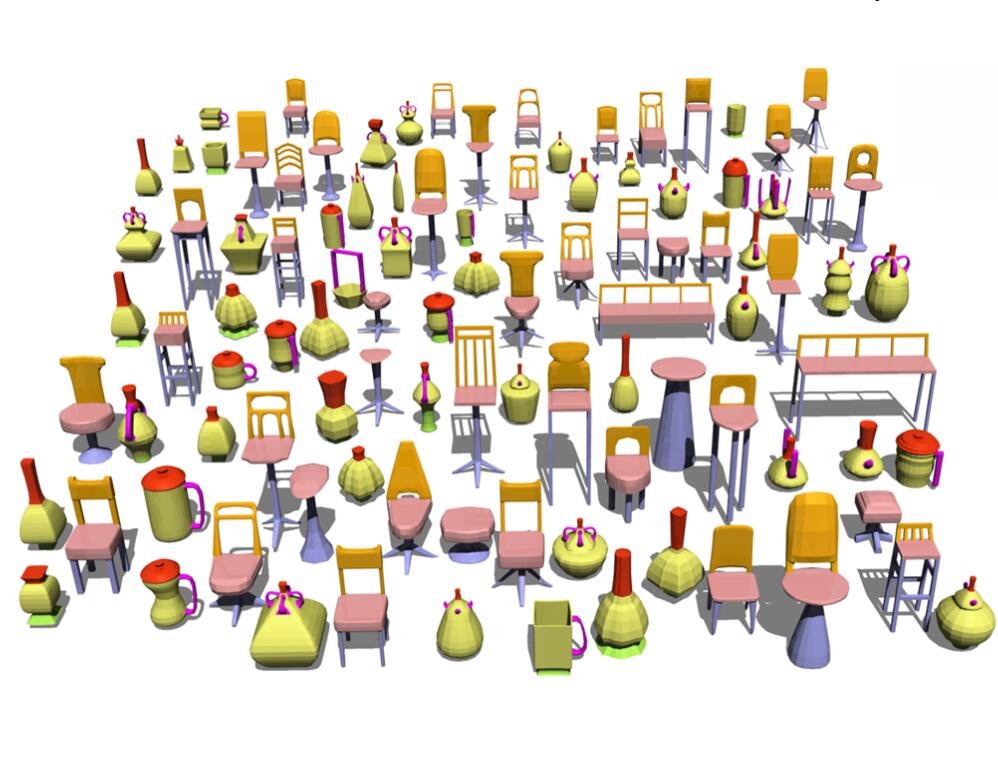

Fenggen Yu, Kun Liu, Yan Zhang, Chenyang Zhu, Kai Xu.

PartNet: A Recursive Part Decomposition Network for Fine-grained and Hierarchical Shape Segmentation.

Accepted to CVPR 2019|[Paper]|[Code & data]

Deep learning approaches to 3D shape segmentation are typically formulated as a multi-class labeling problem. Existing models are trained for a fixed set of labels, which greatly limits their flexibility and adaptivity. We opt for top-down recursive decomposition and develop the first deep learning model for hierarchical segmentation of 3D shapes, based on recursive neural networks.

Fenggen Yu, Yan Zhang, Kai Xu, Ali Mahdavi-Amiri, and Hao (Richard) Zhang.

Semi-Supervised Co-Analysis of 3D Shape Styles from Projected Lines.

Accepted to ACM Transactions on Graphics (to be presented at SIGGRAPH 2018), 37(2)|[Paper]|[Code & data].

We present a semi-supervised co-analysis method for learning 3D shape styles from projected feature lines, achieving style patch localization with only weak supervision. Given a collection of 3D shapes spanning multiple object categories and styles, we perform style co-analysis over projected feature lines of each 3D shape and then backproject the learned style features onto the 3D shapes.

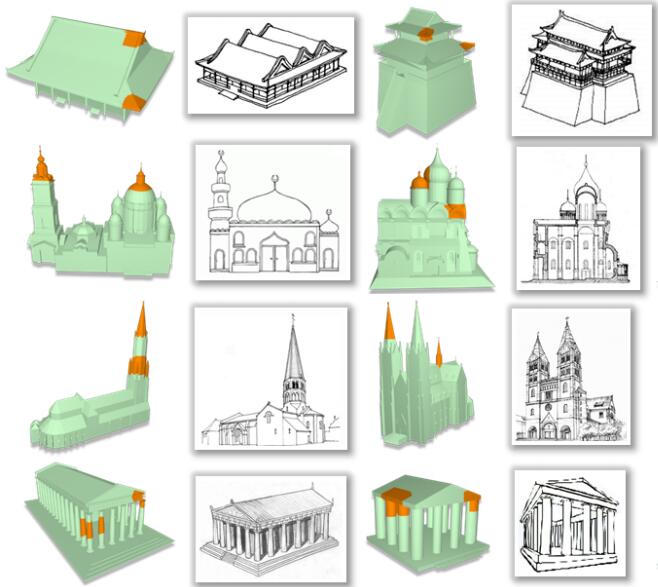

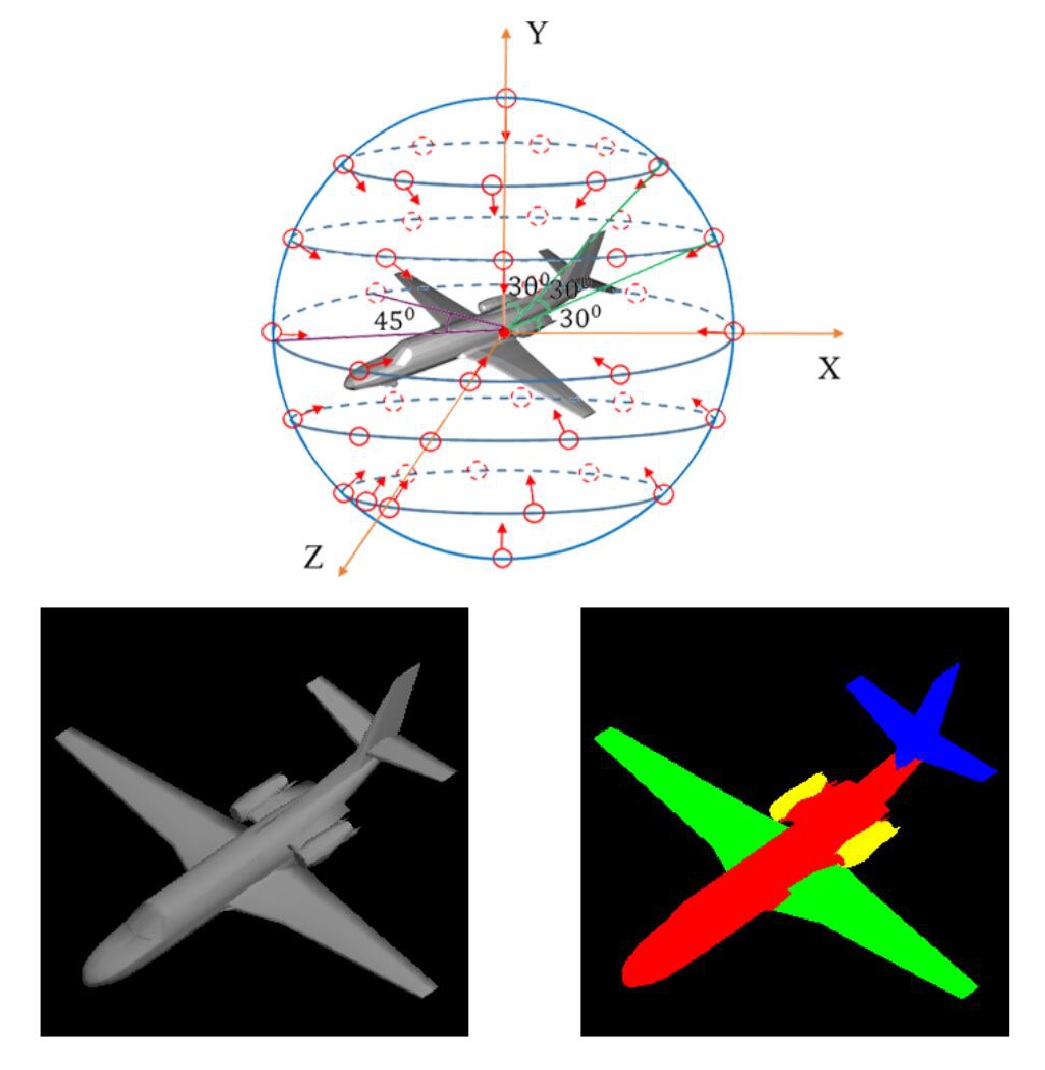

Panpan Shui, Pengyu Wang, Fenggen Yu, Bingyang Hu, Yuan Gan, Kun Liu, Yan Zhang.

3D Shape Segmentation Based on Viewpoint Entropy and Projective Fully Convolutional Networks Fusing Multi-view Features.

Accepted to ICPR 2018|[Paper].

This paper introduces an architecture for segmenting 3D shapes into labeled semantic parts. Our architecture combines a viewpoint selection method based on viewpoint entropy, multi-view image-based Fully Convolutional Networks (FCNs), and a graph cuts optimization method to yield coherent segmentations of 3D shapes.

Pengyu Wang*, Yuan Gan*, Panpan Shui, Fenggen Yu, Yan Zhang, Songle Chen, Zhengxing Sun.

3D Shape Segmentation via Shape Fully Convolutional Networks.

Accepted to Computers & Graphics, Vol 70, Feb 2018|[Paper]|[Code & data].

We design a novel fully convolutional network architecture for shapes, denoted by Shape Fully Convolutional Networks (SFCN). 3D shapes are represented as graph structures in the SFCN architecture, based on novel graph convolution and pooling operations, which are similar to convolution and pooling operations used on images.